Hi there.

I am the technical artist of this project, chiming in with some technical tidbits.

Interior lighting system

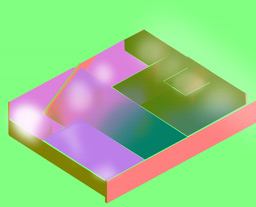

tl;dr: this scene has no in-engine lights. All lighting is computed from:

- RGB neutral render with paintover

- BW shade/light multiplier map encoded into albedo map’s alpha channel

- RGB light direction map

- global sun/shade color variables for each time of day

An additive shade map didn’t work out because it tended to have inverted color information in dark areas (pretty obvious in hindsight, duh). Converting the additive map into BW resulted in super-saturated dark areas (also pretty obvious in hindsight, duh).

A multiplicative light/shade map behaved nicely, except for the 0-to-1 clamp of the PNG format: a multiplier stored that way can darken the render but never brighten it past 1×. Let’s not go into minifloats or EXR for now.

Enter the arctangent encoding

You take a value from 0 to infinity and arctan() it (scaled by 2/π, since arctan tops out at π/2) to squeeze it into the 0-1 range. In engine you sample the map, undo the scale and do a quick tan() before multiplying it onto your map. You get high resolution near 0 and degrading accuracy towards infinity, which hasn’t been detectable by eye as of yet.
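To make the round trip concrete, here is a minimal sketch in Python rather than shader code. The function names and the 8-bit quantisation are assumptions for illustration, not what the project actually ships. Note the division by π/2: arctan of a non-negative value lands in 0 to π/2, so it needs that scale to fill the 0-1 range.

```python
import math

HALF_PI = math.pi / 2.0


def encode_multiplier(m: float) -> int:
    """Squeeze a light/shade multiplier in [0, inf) into one 8-bit channel value."""
    normalized = math.atan(m) / HALF_PI  # lands in [0, 1)
    return round(normalized * 255)


def decode_multiplier(byte: int) -> float:
    """Recover the multiplier from the stored channel value (the shader-side step)."""
    # Assumption: clamp just below full white so tan() never sees pi/2.
    normalized = min(byte, 254) / 255.0
    return math.tan(normalized * HALF_PI)


if __name__ == "__main__":
    # Print original value, stored byte, and the value that comes back out.
    for m in (0.0, 0.25, 1.0, 2.0, 4.0):
        stored = encode_multiplier(m)
        print(m, stored, decode_multiplier(stored))
```

Running it shows the behaviour described above: neighbouring byte values near 0 decode to multipliers that are very close together, while neighbouring values near the top of the range decode to multipliers that are far apart.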

Lightfield map

This (currently hand-crafted) map describes the direction and magnitude of a single ray of light at each point of a 2D space. So technically a very, very simple lightfield wannabe. It is a vague, efficient and apparently good-enough description of which direction the natural light is coming from. The technique relies on lighting conditions being very low frequency and similar on and behind the objects and characters.

The white blobbing you see there is the alpha channel, which we use to map out faux indirect illumination. Low alpha values near the windows result in a strongly directional light with stronger shades/shadows, whereas high alpha values mean light bouncing around more, so the shaders wrap more light around objects and lighten up shade/shadows a bit.
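One way to read the alpha behaviour is as a wrap-lighting term, sketched here in Python. Everything specific in it is an assumption: the RGB channels are taken to store the light vector the way normal maps do (0-1 remapped to -1..1), alpha is used directly as the wrap amount, and `shade` is a hypothetical helper, not the project's shader.

```python
import math


def decode_light(rgb):
    """Remap an RGB texel in [0, 1] to a light vector in [-1, 1] (assumed encoding)."""
    return tuple(c * 2.0 - 1.0 for c in rgb)


def shade(normal, rgb, alpha):
    """Wrapped diffuse term for a unit surface normal and one lightfield texel."""
    light = decode_light(rgb)
    magnitude = math.sqrt(sum(c * c for c in light))
    if magnitude == 0.0:
        return 0.0  # no light information at this point
    n_dot_l = sum(n * l for n, l in zip(normal, light)) / magnitude
    # alpha = 0: plain Lambert with hard falloff; alpha = 1: light wraps to the far side.
    wrapped = max(0.0, (n_dot_l + alpha) / (1.0 + alpha))
    return magnitude * wrapped
```

With alpha at 0, a surface facing sideways to the light gets nothing; with alpha at 1 it gets half intensity, which is the "lighten up the shade a bit" effect.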

Highlighting of interactables

This was apparently a headache and a half! I consider myself pretty knowledgeable in common game graphics techniques, but it came as a surprise that finding the outlines of 3D objects is a relatively difficult task. Shows what I know. I’m told it’s done by enlarging 3D objects along the normals? We ended up using masks and edge detection for various reasons, but that’s another post by kuubaas waiting to happen.

As we witnessed while testing, the normal-extruded outline method is and looks cheap.

For now I’ll just say we use shader replacement to render a mask RenderTexture which is then edge-detected. For silhouette outlines it’s less hassle, more reliable and looks better than any geometry-based solution I’ve seen. (You also get free AA with edge detection, yay.)
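As a rough illustration of the mask-then-edge-detect idea, here is a CPU-side sketch in Python. The actual filter runs on a RenderTexture in a shader and its kernel is not described in this post, so the 4-neighbour difference below and the name `outline_from_mask` are assumptions.

```python
def outline_from_mask(mask):
    """Return per-pixel edge strength in [0, 1] for a 2D mask (list of rows)."""
    height, width = len(mask), len(mask[0])
    edges = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            centre = mask[y][x]
            total = 0.0
            # Compare against the 4 direct neighbours, clamping at the borders.
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ny = min(max(y + dy, 0), height - 1)
                nx = min(max(x + dx, 0), width - 1)
                total += abs(mask[ny][nx] - centre)
            edges[y][x] = total / 4.0
    return edges


if __name__ == "__main__":
    # A 3x3 "object" inside a 5x5 mask.
    mask = [[1 if 1 <= x <= 3 and 1 <= y <= 3 else 0 for x in range(5)] for y in range(5)]
    for row in outline_from_mask(mask):
        print(row)
```

Pixels deep inside or far outside the mask come out as 0, and only pixels along the silhouette get a non-zero strength. The fractional strengths fall off across the edge, which is one way the outline ends up looking softened rather than stair-stepped.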

It’s quite a plain vanilla solution but the colouring technique could be interesting to write further about. Will do a post this month.